Connecting to Postgres and Create a Table

Here I have created ETL Pipeline in a Postgres docker container. To install and run postgres container you can follow Postgres Connection with Docker.

Create a Database in postgres and a table where we will load data at the end.

psql --username=postgres --host=localhost /c template1; CREATE TABLE access_log(timestamp TIMESTAMP, latitude float, longitude float, visitor_id char(37));After creating database name as template1 and table name as access_log. We will quit the postgres shell.

Create a new file named shell-access-log.sh.

touch shell-access-log.shDownload the gzip file and unzip file.

wget "https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBM-DB0250EN-SkillsNetwork/labs/Bash%20Scripting/ETL%20using%20shell%20scripting/web-server-access-log.txt.gz" gunzip -f web-server-access-log.txt.gz

4. Extract Phase

In shell-access-log.sh file write a script to extract the data from gz file and get .txt file.

The columns in the web-server-access-log.txt file are delimited by ‘#’.

Save the delimited separated file in extracted-data.txt.

# Extract phase

echo "Extracting data"

# Extract the columns 1 (timestamp), 2 (latitude), 3 (longitude) and

# 4 (visitorid)

cut -d# -f1-4 web-server-access-log.txt > extracted-data.txt

Save the file and run the bash script command. bash shell-access- log.sh

Check the extracted.txt file

cat extracted.txt

5. Transform Phase

Perform a transform in the txt file to CSV format.

The original “#” delimiter set apart the extracted columns.

We need to convert this into a “,” delimited file name as transformed.csv.

# Transform phase

echo "Transforming data"

# read the extracted data and replace the colons with commas.

sed 's/#/,/g' extracted-data.txt > transformed.csv

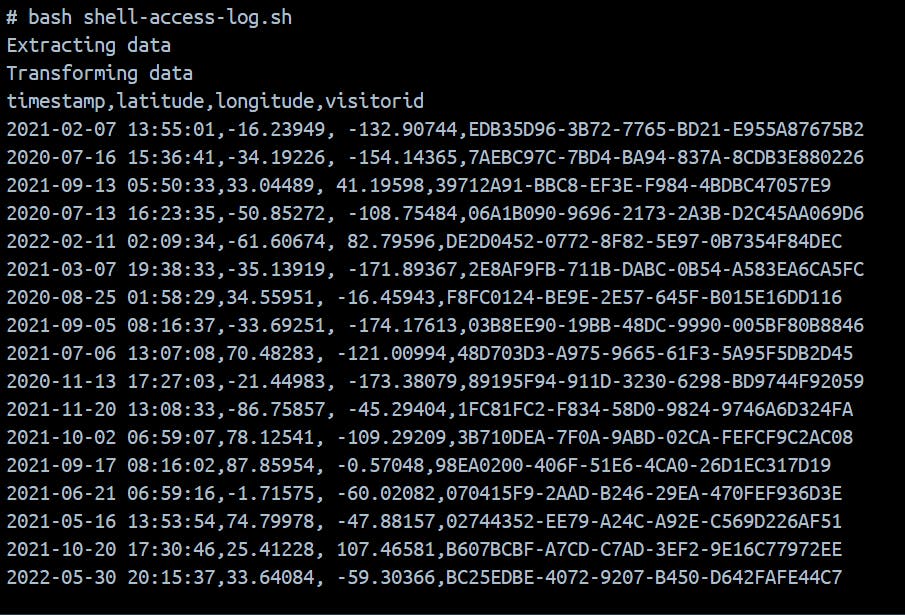

Save the file shell-access-log.sh and run it --- bash shell-access-log.sh

Check the transformed.csv to validate the data.

cat transformed.csv

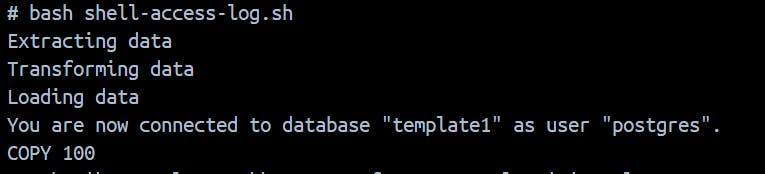

6. Load Phase

Load the data into the table access_log in PostgreSQL.

PostgresSQL copy command will copy data from csv to table in postgres.

Syntax to copy data :-

COPY table_name from 'filename' DELIMITERS 'delimiter_character' FORMAT;

# Load phase

echo "Loading data"

# Send the instructions to connect to 'template1' and

# copy the file to the table 'access_log' through the command pipeline.

echo "\c template1;\\COPY access_log FROM 'transformed.csv'

DELIMITERS ',' CSV HEADER;" | psql --username=postgres --

host=localhost

Run Final script ~ bash shell-access-log.sh

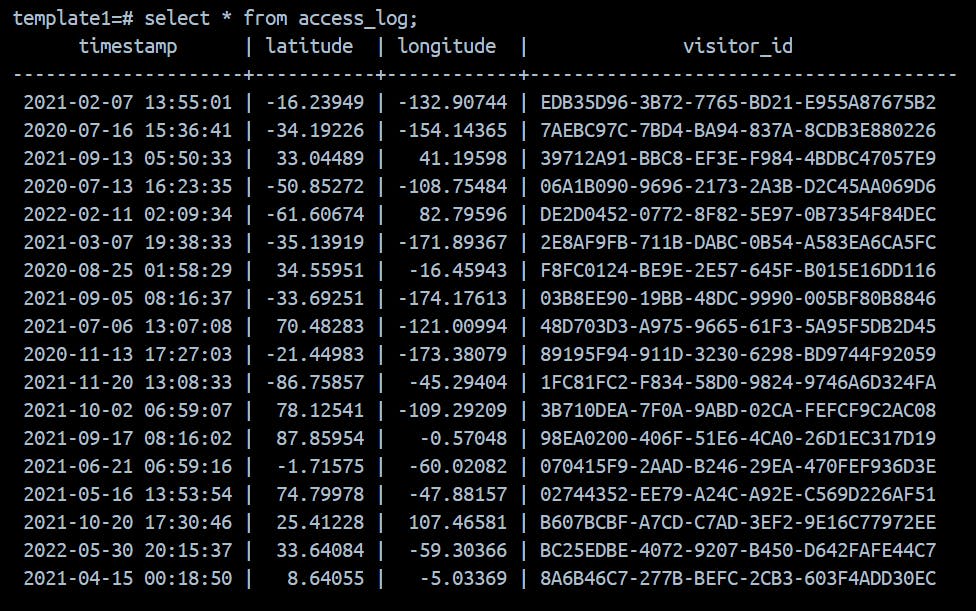

Run the below command to populate the data in table.

psql --username=postgres --host=localhost

\c template1

select * from access_log;